In POMDPs, information about the hidden state, delivered through observations, is both valuable to the agent, since it allows actions to be based on better-informed internal states, and a “curse”, since it explodes the size and diversity of the internal state space. One attempt to deal with this is to focus on reactive policies, which base their actions only on the most recent observation. However, even reactive policies can be demanding on resources, so agents need to pay selective attention to only some of the information available to them in observations.

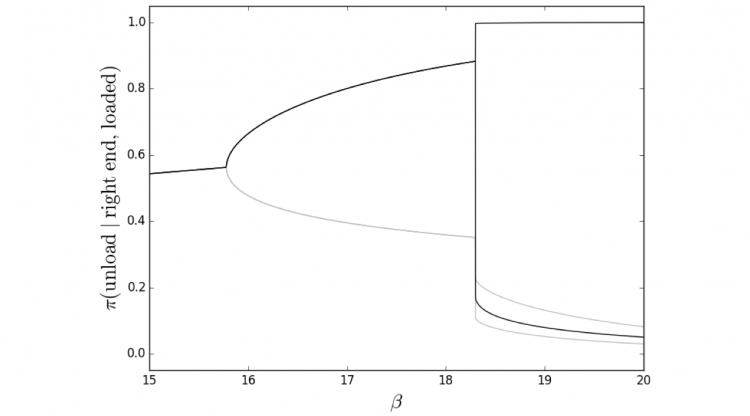

In this report we present the minimum-information principle for selective attention in reactive agents. We further motivate this approach by reducing the general problem of optimal control in POMDPs to reactive control with complex observations. Lastly, we explore a newly discovered phenomenon of this optimization process: period-doubling bifurcations. These bifurcations necessitate periodic policies and raise many more questions regarding stability, periodicity and chaos in optimal control.