Imitation Learning of Hierarchical Programs via Variational Inference

Roy Fox*, Richard Shin*, Pieter Abbeel, Ken Goldberg, Dawn Song, and Ion Stoica

Neural Abstract Machines & Program Induction workshop (NAMPI @ ICML), 2018

The design of controllers that operate in dynamical systems to perform specified tasks has traditionally been manual. Machine learning algorithms enable data-driven generation of controllers, also called policies or programs, and differ in how a user may convey what task the controller should perform. In Imitation Learning (IL), the user demonstrates a supervisor control signal in a set of execution traces, and the objective is to use this data to train a controller that performs the computation correctly on unseen inputs.

This paper takes a hierarchical imitation learning approach to program synthesis. We model the controller as a set of Parametrized Hierarchical Procedures (PHPs) (Fox et al., 2018), each of which can invoke a sub-procedure, take a control action, or terminate and return to its caller. The PHP model maintains a call-stack similar to that of Neural Programmer-Interpreters (NPI) (Reed & De Freitas, 2015; Li et al., 2016), but makes discrete and interpretable procedure calls, rather than smoothing over continuous values.
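The call-stack semantics described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `Call`, `Act`, `Terminate`, and `run`, the per-frame step counter, and the toy environment are hypothetical, and the procedures are hand-written deterministic functions, whereas the procedures in the paper are parametrized and learned.

```python
from dataclasses import dataclass
from typing import Union


# Hypothetical step types: the three things a procedure can do at each step.
@dataclass
class Call:
    procedure: str  # name of the sub-procedure to invoke


@dataclass
class Act:
    action: str  # control action sent to the environment


@dataclass
class Terminate:
    pass  # return control to the caller


Step = Union[Call, Act, Terminate]


def run(procedures, root, env_step, obs, max_steps=100):
    """Execute `root` with an explicit call-stack of [name, counter] frames."""
    stack = [[root, 0]]
    actions = []
    for _ in range(max_steps):
        if not stack:
            break  # the root procedure has terminated
        frame = stack[-1]
        name, counter = frame
        step = procedures[name](obs, counter)
        frame[1] += 1  # advance this frame's step counter
        if isinstance(step, Call):
            stack.append([step.procedure, 0])  # push the callee
        elif isinstance(step, Act):
            actions.append(step.action)
            obs = env_step(obs, step.action)  # environment transition
        else:
            stack.pop()  # Terminate: return to the caller
    return actions, obs


# Toy task (assumed): move right along a line until position 3 is reached.
def move_to_three(obs, counter):
    return Call("step_right") if obs < 3 else Terminate()


def step_right(obs, counter):
    return Act("right") if counter == 0 else Terminate()


def env_step(obs, action):
    return obs + 1 if action == "right" else obs


procedures = {"move_to_three": move_to_three, "step_right": step_right}
actions, final_obs = run(procedures, "move_to_three", env_step, obs=0)
```

In this sketch, a weakly supervised demonstration would record only the observations and the entries of `actions`, while a strongly supervised one would also record the sequence of `Call` and `Terminate` steps and the resulting stack contents.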

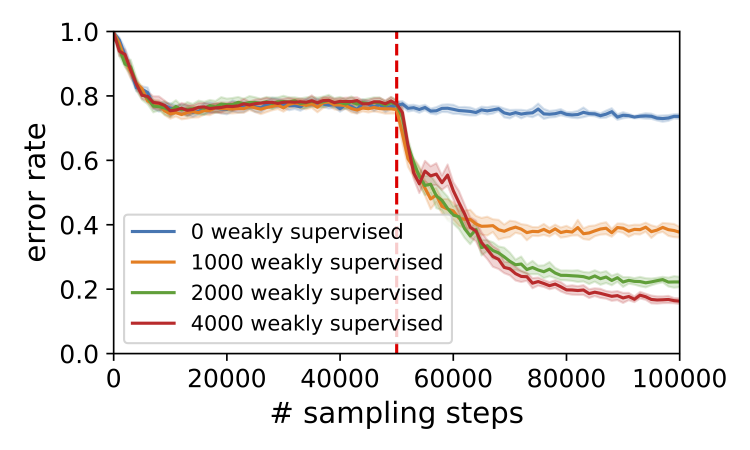

We consider a dataset of weakly supervised demonstrations, in which the user provides observation–action trajectories executed by an expert controller. Inferring hierarchical structure from weak supervision, in which the structure is never observed, is challenging, particularly with deep multi-level hierarchies. To facilitate discovery of the structure, we augment the dataset with strongly supervised demonstrations that show not only the control actions to take, but also the internal structure of the program flow that led to these actions. Training from a mixture of strongly and weakly supervised trajectories can discover highly informative structures from few strongly supervised trajectories, and leverage these structures to learn models that generalize well from a larger number of weakly supervised trajectories.

We propose to use autoencoders to train hierarchical procedures from weakly supervised data, an approach that has proven successful in unsupervised learning (Baldi, 2012; Kingma & Welling, 2013; Rezende et al., 2014; Zhang et al., 2017). We present a novel method of stochastic variational inference (SVI), in which a hierarchical inference model is trained to approximate the posterior distribution of the latent hierarchical structure given the observable traces. The inference model is used to impute the call-stack of hierarchical procedures and to guide the training of the generative model, i.e., the procedures themselves.
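For orientation, variational methods of this kind typically maximize an evidence lower bound (ELBO) of the following generic form. The notation is assumed here for illustration and is not taken from the paper: $\xi$ denotes an observable trace, $\zeta$ the latent hierarchical structure (the call-stack), $p_\theta$ the generative model (the procedures), and $q_\phi$ the inference model.

```latex
\log p_\theta(\xi)
  \;\ge\;
  \mathbb{E}_{q_\phi(\zeta \mid \xi)}
  \left[ \log p_\theta(\xi, \zeta) - \log q_\phi(\zeta \mid \xi) \right]
```

The bound is tight when $q_\phi(\zeta \mid \xi)$ equals the true posterior $p_\theta(\zeta \mid \xi)$, so a more accurate inference model yields a better training signal for the procedures.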

The contributions of this paper are: (1) extending the PHP model with procedures that take arguments; (2) a hierarchical variational inference method for training PHPs from weak supervision. Our method generalizes Stochastic Recurrent Neural Networks (SRNNs) (Fraccaro et al., 2016) to hierarchical controllers. Compared to level-wise hierarchical training via the Expectation–Gradient (EG) method (Fox et al., 2018), our SVI approach applies to deeper hierarchies and to procedures that take arguments.