Multi-Task Learning via Task Multi-Clustering

Andy Yan, Xin Wang, Ion Stoica, Joseph Gonzalez, and Roy Fox

Adaptive & Multitask Learning workshop (AMTL @ ICML), 2019

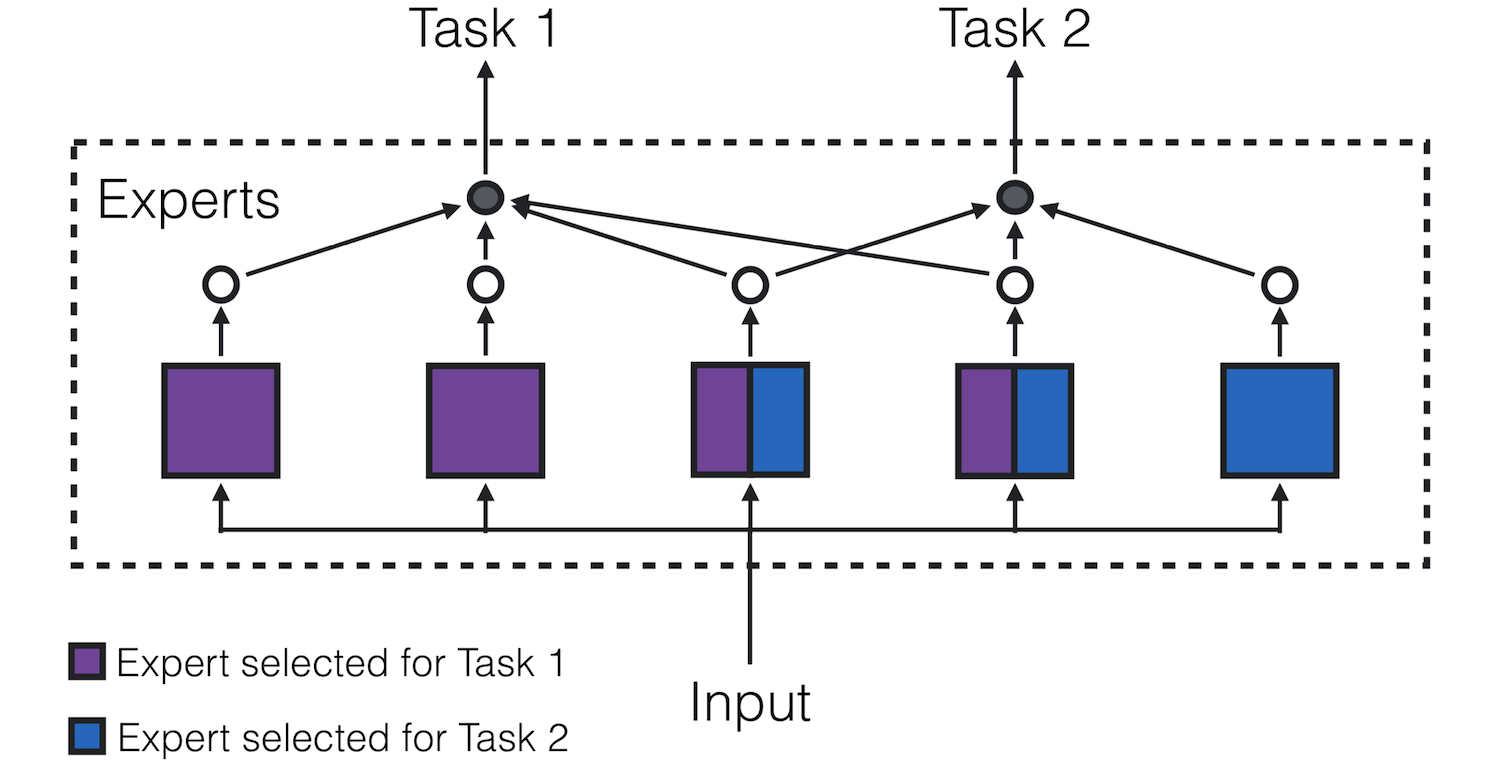

Multi-task learning has the potential to facilitate learning of shared representations between tasks, leading to better task performance. Some sets of tasks are related, and can share many features that are useful latent representations for these tasks. Other sets of tasks are less related, possibly sharing some features, but also competing for the representational resources of shared parameters. We propose to discover how to share parameters between related tasks and split parameters between conflicting tasks, by learning a multi-clustering of the tasks. We present a mixture-of-experts model, where each cluster is an expert that extracts a feature vector from the input, and each task belongs to a set of clusters whose experts it can mix. In experiments on the CIFAR-100 MTL domain, multi-clustering outperforms, in both accuracy and computation time, a model that mixes all experts. The results suggest that the performance of our method is robust to regularization that increases the model’s sparsity when sufficient data is available, and can benefit from sparser models as data becomes scarcer.
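To make the structure described above concrete, the following is a minimal NumPy sketch of a forward pass in which each task mixes only the experts of the clusters it belongs to. It is an illustration under stated assumptions, not the authors' implementation: the function name `mix_task_features`, the single linear layer with `tanh` per expert, the softmax mixing, and the hand-written `membership` matrix are all hypothetical. In the proposed method the multi-clustering is learned, whereas here it is fixed by hand.

```python
import numpy as np


def mix_task_features(x, expert_weights, membership, mix_logits, task):
    """Mix features from the experts whose clusters contain `task`.

    x:              (D_in,) input vector
    expert_weights: (K, D_in, D_feat) one linear map per expert (assumed form)
    membership:     (T, K) boolean, membership[t, k] is True if task t is in cluster k
    mix_logits:     (T, K) per-task mixing scores (assumed softmax mixing)
    """
    active = np.flatnonzero(membership[task])
    if active.size == 0:
        raise ValueError(f"task {task} belongs to no cluster")

    # Evaluate only the active experts; skipped experts cost no computation.
    feats = np.tanh(np.einsum("d,kdf->kf", x, expert_weights[active]))

    # Softmax over the active experts only (shifted for numerical stability).
    logits = mix_logits[task, active]
    w_active = np.exp(logits - logits.max())
    w_active /= w_active.sum()

    # Report weights over all K experts, with zeros for inactive ones.
    weights = np.zeros(membership.shape[1])
    weights[active] = w_active
    return w_active @ feats, weights


# Hypothetical toy setup: 4 experts (clusters), 3 tasks.
rng = np.random.default_rng(0)
K, T, D_in, D_feat = 4, 3, 8, 5
expert_weights = rng.normal(size=(K, D_in, D_feat))
membership = np.array(
    [[1, 1, 0, 0],   # task 0 shares expert 1 with task 1
     [0, 1, 1, 0],   # task 1 shares expert 2 with task 2
     [0, 0, 1, 1]],
    dtype=bool,
)
mix_logits = rng.normal(size=(T, K))
x = rng.normal(size=D_in)

features, weights = mix_task_features(x, expert_weights, membership, mix_logits, task=0)
```

In this toy setup, task 0 evaluates two of the four experts, which illustrates why a sparser membership matrix can reduce computation relative to mixing all experts. A task-specific output head would consume `features`; it is omitted here.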